For any online business, organic traffic is worth its weight in gold.

This is especially true for e-commerce businesses that rely on a steady flow of traffic for growth.

Being open for business does not guarantee customers or success.

In a crowded online space, a robust marketing strategy is a must to attract, convert, and retain potential customers.

Therefore, when an e-commerce business in the CBD niche approached us to increase traffic through organic search to its site, we leveraged our expertise to deliver results.

For those who don’t know, CBD is a tough niche to crack, especially with the recent Google updates and FDA regulations. It has become increasingly difficult to rank and to maintain those rankings.

As this CBD SEO case study shows, we not only maintained but also increased their overall traffic over the past 12 months.

Here is a snapshot of what we’ve accomplished for our client in 12 months:

We did a complete audit of the client’s site and identified 12 key areas needing improvement across all SEO segments: off-page, on-page, and technical.

After twelve months, our changes had increased the client’s monthly website traffic from 2k to 20k.

In the following sections, we explore each action we took, why it was important from an SEO perspective, and how it improved the performance of the client’s site.

Optimizing for On-Page SEO:

Quick overview: On-page SEO is how websites optimize their pages to improve user experience and ranking on SERPs.

Here’s how we optimized for on-page SEO:

1. Planning out a content calendar

Content is often central to a site’s marketing efforts, especially when generating inbound leads, traffic, and growing a business.

And content calendars play an integral role in this process.

Having a schedule of what, when, and how often new content is posted helps businesses stay organized and have an overview of strategy in one place.

It also helps ensure that all new content is optimized for search engines.

We immediately noticed that the client’s content effort was uneven.

On their blog, new content was being published inconsistently.

To fix this, our team created a six-month data-driven content calendar with the help of keyword research.

Here is what it looks like:

To attract potential customers, we first identified the MoF (middle of the funnel) and BoF (bottom of the funnel) keywords the client’s target audience was most interested in, using monthly search volume, keyword difficulty, and a review of the SERPs.

We chose MoF and BoF keywords so we could first go after keywords that have some commercial intent and are easy to rank for. This ensured we had some quick wins early on while growing the client’s bottom line.

Also, the publishing frequency was increased. The client was publishing 5-7 posts every month. We increased that to 30 posts every month. This frequency was followed religiously.

Why did we do this?

We created a six-month calendar in advance and front-loaded all our main posts into those months. Instead of publishing these posts over a full year and then waiting for Google to start ranking them, we published them in the first six months so they could begin ranking sooner.

For each post, we prepared a content outline for the writers. Each outline contained the following information:

- SEO optimization checklist

- Main keyword

- What the URL slug will be

- Related keywords to add

- Relevant internal links to add

- Headings and subheadings for the content

This way, the content writers knew exactly what to do without needing to know how SEO works. Their job was simply to write better content while following the guidelines we had provided.
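To make the outline concrete, here is a minimal sketch of how such a brief could be represented and checked for completeness before it goes to a writer. The `ContentBrief` class and its field names are our own illustration of the list above, not a tool used in this project, and the sample values are made up.

```python
from dataclasses import dataclass, field


@dataclass
class ContentBrief:
    """One outline handed to a writer (hypothetical structure)."""
    main_keyword: str
    url_slug: str
    related_keywords: list[str] = field(default_factory=list)
    internal_links: list[str] = field(default_factory=list)
    headings: list[str] = field(default_factory=list)
    seo_checklist: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of the fields that are still empty."""
        return [name for name, value in vars(self).items() if not value]


# Illustrative values only.
brief = ContentBrief(main_keyword="cbd flower", url_slug="cbd-flower")
print(brief.missing_fields())
```

A brief with anything left in `missing_fields()` would simply go back to the SEO team before reaching a writer.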

Note: We continued this practice after 6 months and again prepared a content calendar for the next 6 months.

2. Fixed thin content issues

Thin content is any content that provides little to no value to users, or whose word count falls short for a topic that calls for an in-depth guide.

For example, thin content could be a 500-word blog post that lacks depth, or even a 2,000-word post that does not satisfy search intent.

It all depends on what the user intent is for a specific keyword.

For our client, we found that their blog posts had no proper structure, such as subheadings that Google recommends to provide structure and improve readability.

Similarly, the client did not incorporate the focus or related keywords in subheadings, the title of posts, or the copy.

Google’s algorithm prioritizes content that meets its requirements to ensure it can return the best results to searchers. Without keywords to help it understand the page, it would be difficult to rank it.

Hence, we updated the client’s old posts so that each article was well optimized with relevant subheadings and both main and related keywords. The content was broken into chunks to improve readability.

The URL structures were improved, and the posts’ meta titles and descriptions were updated to fit within the character limits recommended for Google.

In some cases, posts were updated with additional content where the intent was informational and required in-depth knowledge.
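The metadata part of this clean-up lends itself to a simple automated check. Below is a minimal sketch, assuming limits of 60 characters for titles and 160 for descriptions. Those numbers are common SEO rules of thumb, not figures from this project or official Google limits, and the `PageMeta` type is hypothetical.

```python
from dataclasses import dataclass

# Assumed limits: widely used rules of thumb, not official Google numbers.
TITLE_MAX_CHARS = 60
DESCRIPTION_MAX_CHARS = 160


@dataclass
class PageMeta:
    url: str
    title: str
    description: str


def audit_meta(page: PageMeta) -> list[str]:
    """Return a list of human-readable problems with one page's metadata."""
    problems = []
    if not page.title.strip():
        problems.append("missing title")
    elif len(page.title) > TITLE_MAX_CHARS:
        problems.append(f"title too long ({len(page.title)} > {TITLE_MAX_CHARS})")
    if not page.description.strip():
        problems.append("missing description")
    elif len(page.description) > DESCRIPTION_MAX_CHARS:
        problems.append(
            f"description too long ({len(page.description)} > {DESCRIPTION_MAX_CHARS})"
        )
    return problems
```

Running `audit_meta` over every post gives a to-do list of pages whose metadata still needs attention.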

3. Optimized the URLs

Although URL length does not directly impact SEO, shorter URLs correlate with better rankings.

Why?

A shorter URL (<80 characters) provides a better user experience and is easier to scan and read.

This drives click-through rates, which can boost rankings.

For example, which link is more enticing to click?

- Option A – xyz.com/blog/how-to-promote-a-post

- Option B – xyz.com/blog/19592

For most people, option A will be the obvious choice.

A short URL also makes it easier for search engines to determine context.

But a short URL alone isn’t enough. Certain best practices must be followed to ensure better performance.

For example, the URL should include the page title or focus keyword.

Main Keyword: CBD Flower

URL: xyz.com/collections/cbd-flower

Besides an SEO boost, another benefit of shorter, cleaner URLs is that they can be shared on social media, email campaigns, and other marketing channels.

With this knowledge, we analyzed the client’s URL profile and replaced every lengthy URL with a shorter one built around the page’s main keyword.
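As an illustration of the keyword-based URLs described above, here is a minimal sketch that turns a main keyword into a slug and flags URLs at or over the 80-character guideline. The function names are our own, and `xyz.com` is the placeholder domain from the examples above.

```python
import re

MAX_URL_CHARS = 80  # guideline mentioned earlier in this section


def keyword_to_slug(keyword: str) -> str:
    """Lowercase the keyword and join its alphanumeric words with hyphens."""
    words = re.findall(r"[a-z0-9]+", keyword.lower())
    return "-".join(words)


def build_url(base: str, section: str, keyword: str) -> str:
    """Combine a base domain, a section, and a keyword slug into one URL."""
    return f"{base.rstrip('/')}/{section.strip('/')}/{keyword_to_slug(keyword)}"


def is_short(url: str) -> bool:
    """True when the URL is under the length guideline."""
    return len(url) < MAX_URL_CHARS


print(build_url("xyz.com", "collections", "CBD Flower"))
```

Any URL you change this way still needs a 301 redirect from its old address, otherwise existing links and rankings are lost.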

4. Resolved keyword cannibalization

Keyword cannibalization is when more than one page on a site competes on SERPs for the same keyword or phrase.

It’s an easily overlooked SEO issue that affects websites.

When multiple pages on the same website compete, it lowers the overall click-through rate and affects the conversion rate.

How?

Spreading similar content across several pages, rather than consolidating it in a single authoritative post, dilutes performance.

For example, let’s say a website writes two blog posts targeting the same keyword. This will split incoming traffic, and unless both posts satisfy user intent equally, the page that performs worse will lower overall ranking potential.

But that’s just part of the headache.

Keyword cannibalization can also confuse Google and lead it to rank the URL a business did not want to rank for that keyword.

Alternatively, it can lead to instances of different URLs appearing on SERPs. In both situations, the business has little control over the outcome.

For eCommerce businesses with extensive inventories and similar product names, keyword cannibalization can quickly become an expensive problem.

To spot keyword cannibalization, a variety of tactics are available.

One of the most comprehensive options is to perform a content audit. This includes grouping similar content, analyzing traffic, impressions, metadata, and more to visualize potential cannibalization issues.

Here is what we did for the client:

For existing content: We applied 301 redirects on underperforming content to redirect visitors to a better version of similar content.

For new content: We first compiled a list of all the keywords related to our niche from Ahrefs, then used a keyword clustering tool to group keywords with similar intent and avoid cannibalization.
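The redirect decision for existing content can be sketched in a few lines. This is a simplified illustration, not the audit process we actually ran: it assumes you have exported rows of `(keyword, url, clicks)` from a rank tracker or Search Console, and it treats the URL with the most clicks as the "better version". The URLs and numbers are made up.

```python
from collections import defaultdict


def plan_redirects(rows):
    """rows: iterable of (keyword, url, clicks).

    Returns {underperforming_url: best_url} for every keyword that has
    more than one URL competing for it.
    """
    clicks_by_keyword = defaultdict(dict)
    for keyword, url, clicks in rows:
        pages = clicks_by_keyword[keyword.strip().lower()]
        pages[url] = pages.get(url, 0) + clicks

    redirects = {}
    for pages in clicks_by_keyword.values():
        if len(pages) < 2:
            continue  # only one page targets this keyword: no cannibalization
        best = max(pages, key=pages.get)
        for url in pages:
            if url != best:
                redirects[url] = best
    return redirects


# Made-up sample data for illustration.
rows = [
    ("cbd oil", "/blog/cbd-oil-guide", 120),
    ("CBD Oil ", "/blog/what-is-cbd-oil", 15),
    ("cbd gummies", "/blog/cbd-gummies", 40),
]
print(plan_redirects(rows))
```

Treat the output as a list of candidates to review by hand. A page can lose on one keyword and win on another, so a human should confirm each 301 before it goes live.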

5. Updated internal link structure

Besides inbound links, internal links (links that send users from one page to another on the same website) are an extremely powerful ranking factor.

Why?

They make it easier for search engines and users to find what they’re looking for.

For search engines, internal links also help web crawlers find a page online, speeding up indexing (more on this later).

Remember, algorithms are far from perfect.

Helping them understand the content of a page, its relationship to other pages on a site, and its overall importance provides an SEO boost.

Moreover, internal links improve the user experience.

By anticipating other content a visitor may be interested in while on a page, a business can not only keep customers on its site for longer but also build trust and loyalty and drive sales by getting users to take specific actions.

Hence, a website’s structure in terms of navigation between pages and grouping similar content is essential from an SEO perspective.

A review of the client’s site architecture revealed an overhaul was required.

The process was broken down into several steps.

First, we made a list of all the posts on the site.

This information was then used to group similar posts, and internal links were added across all the posts.

Here’s an example:

Is CBD legal in the US

is CBD legal in Florida

is CBD legal in Utah

is CBD legal in Arizona

is CBD legal in North Carolina

is CBD legal in Minnesota

is CBD legal in Wisconsin

is CBD legal in Mexico

is CBD legal in New York

is CBD legal in Hawaii
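One way to wire up a group like this is a hub-and-spoke pattern, where the broad post links to every specific post and each specific post links back. The sketch below assumes that pattern and uses hypothetical slugs. The exact linking scheme we applied is not spelled out here, so read it as one possible approach.

```python
def hub_and_spoke_links(hub: str, spokes: list[str]) -> list[tuple[str, str]]:
    """Return (source, target) pairs: hub -> each spoke and each spoke -> hub."""
    links = []
    for spoke in spokes:
        links.append((hub, spoke))
        links.append((spoke, hub))
    return links


# Hypothetical slugs based on the post titles listed above.
hub = "/blog/is-cbd-legal-in-the-us"
spokes = ["/blog/is-cbd-legal-in-florida", "/blog/is-cbd-legal-in-utah"]

for source, target in hub_and_spoke_links(hub, spokes):
    print(f"{source} -> {target}")
```

The resulting pairs can be handed to whoever edits the posts as a checklist of links to add.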

6. Optimized product pages

Product pages are the ‘shelves’ of online selling.

It’s where products are advertised.

These pages must be optimized not only for Google but also for visitors to convince them to become buyers.

Examples of great product pages feature high-quality images and informative content about the product.

Including content on product pages helps educate visitors and guides them in their decision to make a purchase.

But content alone is not enough.

Often, the first touchpoint for potential customers who already know what they want to buy is the SERP, not the website.

Therefore, how a brand presents itself in terms of metadata (title and meta description) on SERPs can make the difference between a click and a skip.

Ideally, product page titles and meta descriptions should answer customer pain points and share unique selling points.

After a review of the client’s product pages, we noticed a severe lack of content and little proper use of metadata.

As a result, all product pages were updated to include optimized content and metadata to rank and boost traffic from SERPs.

This resulted in the product pages ranking far better on Google than they previously had.

7. Optimized collection pages

In eCommerce, collection pages display multiple products in one place.

Customers spend the bulk of their time browsing collection pages, so it’s crucial to ensure they are optimized for conversions.

A review of the client’s collection pages revealed several areas needing improvement.

First, like in the case of product pages, the collection pages lacked content.

Content is an opportunity to describe what’s in the collection. This sets the stage and helps customers understand the products they see.

The client’s collection pages were also missing metadata like titles and descriptions.

Ecommerce stores must be careful when creating collections. These pages should have a structure and filter options, allowing customers to navigate the website easily.

More importantly, businesses should include collection titles to help customers understand the page.

This is not always straightforward and requires an understanding of customers.

Like with product pages, we updated collection pages where necessary. After these changes, the client ranked on the first page for some of the collection pages.

Optimizing for Off-Page SEO:

Besides on-page issues, we also reviewed the client’s off-page SEO efforts.

Quick overview: Off-page SEO refers to the actions businesses take outside their site to improve ranking on search engine result pages (SERPs).

For an e-commerce business, this translates to more visibility and sales.

An integral part of off-page SEO is backlinks, which act as votes of confidence from relevant websites to yours.

After an audit of the client’s site, these are the off-page SEO improvements we applied:

8. Reduced use of exact match anchor text

Anchor text is the clickable words within a hyperlink. These phrases help search engines understand the context of a link and navigate the web.

In the early days of SEO, anchor text was a ranking factor. Google considered it a signal that helped its search engine understand the context of pages.

But with the Penguin update in 2012, Google began penalizing websites for overuse of exact match anchor text as websites were manipulating its use for rankings.

What is an exact match anchor text?

It’s the use of the exact keyword or phrase in a link that the website wants to rank for.

For example, imagine a website trying to rank for the primary keyword ‘CBD Oil’ that builds backlinks on several websites, all with the anchor text ‘CBD Oil.’ This lets Google know that you’re trying to manipulate the SERPs.

Google does not have a problem with exact match anchor text per se, but there’s a fine line between fair use and manipulating the algorithm with keyword stuffing.

This is precisely what happened with our client. The majority of their backlinks were built with exact match anchor text, and as a result they experienced a decrease in traffic and rankings.

Therefore, we recommend limiting the use of exact match anchor text in backlinks to between 1% and 5% per post.

Most of the previous anchor texts were changed by the client, and the new backlinks which we built had a mix of these types of anchor texts:

- Exact Match (Only 1-5%)

- Partial Match

- Branded (Initially, we built most of the backlinks on branded terms)

- Generic

- Naked link
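To see where a backlink profile stands against that 1-5% guideline, the anchors can be bucketed into the types above and counted. The sketch below is a rough illustration with deliberately simple matching rules. The brand name "Acme Hemp", the generic phrase list, and the classification order are all assumptions of ours, not rules Google publishes.

```python
import re
from collections import Counter

# Assumed examples of generic anchors; extend as needed.
GENERIC_ANCHORS = {"click here", "read more", "learn more", "here", "this site", "website"}


def classify_anchor(anchor: str, keyword: str, brand: str) -> str:
    """Bucket one anchor text into naked/exact/branded/partial/generic/other."""
    text = anchor.strip().lower()
    keyword = keyword.lower()
    brand = brand.lower()
    if re.fullmatch(r"\S+\.\S+", text):  # a single token containing a dot, e.g. a URL
        return "naked"
    if text == keyword:
        return "exact"
    if brand in text:
        return "branded"
    if keyword in text or any(word in text.split() for word in keyword.split()):
        return "partial"
    if text in GENERIC_ANCHORS:
        return "generic"
    return "other"


def anchor_profile(anchors: list[str], keyword: str, brand: str) -> dict[str, float]:
    """Percentage share of each anchor type across all backlinks."""
    if not anchors:
        return {}
    counts = Counter(classify_anchor(a, keyword, brand) for a in anchors)
    return {kind: round(100 * n / len(anchors), 1) for kind, n in counts.items()}


# Made-up anchors for illustration.
anchors = ["CBD Oil", "Acme Hemp", "best cbd oil for sleep", "click here", "xyz.com"]
print(anchor_profile(anchors, keyword="CBD Oil", brand="Acme Hemp"))
```

If the `exact` share comes back well above 5%, that is the signal to rebalance toward branded, partial, and generic anchors.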

9. Removed low-quality backlinks

Not all backlinks are created equal.

Low-quality backlinks from previously penalized sites or sites with abrupt traffic changes can negatively impact another site.

Under its quality guidelines, Google may lower the rankings of a page or site because of a backlink from a previously penalized source, even if that page or site has no history of shady SEO practices.

But it’s not only backlinks from penalized sites a site has to be careful of.

Backlinks from websites with little to no traffic or low-quality/irrelevant backlinks on their site can also be damaging.

Since these websites contribute little to the overall user experience, it’s better to eliminate such backlinks altogether.

Note: I know it’s a controversial topic in the SEO world, but we only disavow links if the website in question is not relevant to our niche, is a PBN (private blog network), or has a spammy backlink profile itself.

It’s important to note that backlinks from sites irrelevant to your niche are also considered low-quality.

In the case of the CBD niche, a link from a car insurance or travel site makes little sense and does more harm than good.

Remember, Google values relevance.

Therefore, we did a site-wide backlink assessment to identify and disavow low-quality links.

To do this, an internal filter was created after a discussion to remove links that did not meet certain conditions.

Here are the filters we used:

- All referring domains must have at least 500 visitors per month

- Backlinks must be from relevant domains that are at least one year old

- Backlinks must be from sites with no history of drastic traffic swings, especially sharp drops in traffic

- The website must have a healthy backlink profile
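These filters translate naturally into a small screening function. The sketch below is illustrative only: the `ReferringDomain` fields are our own naming, and judgments such as "relevant" or "healthy backlink profile" still have to be made by a person and entered as true/false. The output uses the `domain:` line format that Google's disavow file expects.

```python
from dataclasses import dataclass


@dataclass
class ReferringDomain:
    domain: str
    monthly_visitors: int
    age_years: float
    is_relevant: bool                # judged manually
    had_traffic_crash: bool          # drastic swing or sharp drop in its history
    healthy_backlink_profile: bool   # judged manually


def passes_filters(d: ReferringDomain) -> bool:
    """Apply the four conditions listed above."""
    return (
        d.monthly_visitors >= 500
        and d.is_relevant
        and d.age_years >= 1
        and not d.had_traffic_crash
        and d.healthy_backlink_profile
    )


def disavow_candidates(domains: list[ReferringDomain]) -> list[str]:
    """Lines in disavow-file format for every domain that fails a filter."""
    return [f"domain:{d.domain}" for d in domains if not passes_filters(d)]


# Made-up domains for illustration.
domains = [
    ReferringDomain("hemp-news.example", 4200, 3.0, True, False, True),
    ReferringDomain("cheap-car-insurance.example", 900, 5.0, False, False, True),
]
print(disavow_candidates(domains))
```

Given how contested disavowing is, it is worth reviewing the candidate list by hand before uploading anything.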

10. Built high-quality guest post backlinks

As we worked on fixing the exact match anchor text issue, we started building new backlinks by guest posting on different relevant websites.

We exported all the relevant websites from Ahrefs and ran manual email outreach using Lemlist, offering to write a high-quality 1,000-word guest post for their blog.

But simply following the method above won’t produce great results. Anyone can do that.

See here what ‘most’ people do:

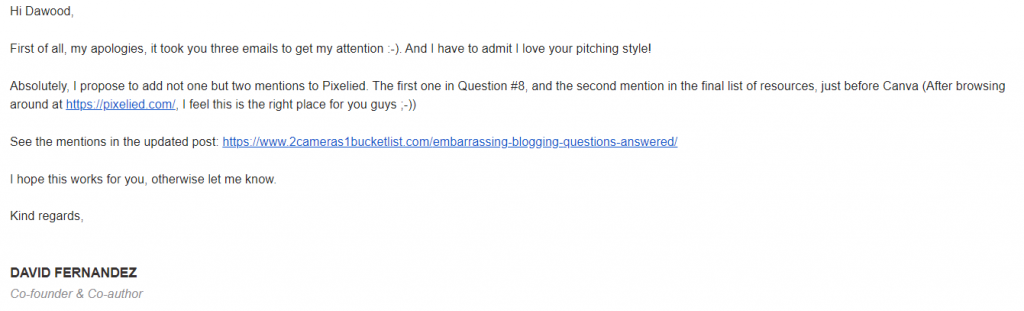

Instead of sending spammy-looking ‘Dear Sir/Madam’ emails, this is what we did for one of our sites:

Here’s the response we got: (Not one but TWO mentions 😁)

Here’s an in-depth step-by-step guide on how to acquire backlinks from reputable and high authority websites using personalization in your email outreach.

Due to our methods and techniques, we were able to get placements on some of the most reputable websites.

You’re bound to come across many sites that charge for link insertion in the CBD niche. Some of the client’s backlinks were built at no cost but some were acquired for a price.

But we only approached those websites that met these criteria:

- All referring domains must have at least 500 visitors per month

- Backlinks must be from relevant domains that are at least one year old

- Backlinks must be from sites with no history of drastic traffic swings, especially sharp drops in traffic

- The website must have a healthy backlink profile

- The website must not be a PBN

Optimizing for Technical SEO:

Quick overview: Technical SEO refers to optimizations that help search engines crawl and index the site faster and improve user experience.

After an audit of the client’s site, we made the following technical changes:

11. Improved core web vitals

Web vitals is a term coined by Google to help websites provide the best user experience.

It can be divided into two categories: core web vitals and non-core web vitals.

Core web vitals covers three metrics linked to page speed and user interaction, but the two most important ones are the largest contentful paint (LCP) and cumulative layout shift (CLS).

For best results, every page on a website should be optimized for core web vitals.

But that does not mean other web vitals such as mobile friendliness should be ignored, as they also improve user experience.

We analyzed the client’s website and noticed its layout and content were not optimized for mobile users, despite mobile being the largest traffic source.

The site’s in-house developers were roped in for a redesign of the site with a new light-weight theme without all the bells and whistles.

The website’s pages and posts were served through Cloudflare’s CDN, which made the site load faster.

Next, the developers compressed or minified the CSS and JavaScript (JS) files the website was built on. A smaller file size means faster downloads and less time for the browser to read the file.

Last but not least, unnecessary elements from the site’s theme were removed to reduce bloat.

Together, these changes made the pages load much faster, and all the landing pages eventually passed the core web vitals and mobile experience assessments.

12. Fixed 404 errors

Unless you’re new to the Internet, it’s rare not to have experienced a 404 error.

These errors appear when a user tries to access a page that has either moved or been deleted without any redirect or links to help users navigate elsewhere.

From an SEO perspective, this hurts rankings.

First, it’s a bad user experience. After a 404 error, most users leave the website entirely, searching for an alternative elsewhere.

In the world of eCommerce, this means losing customers to competitors.

Users leaving a website also increases the bounce rate, which is a negative signal that leads to Google lowering your rankings.

Additionally, if Google picks up on multiple instances of users being unable to access a link, it could de-index the page. This means it will never show up on SERPs.

Therefore, we analyzed two months of the client’s server logs to check whether any landing pages had 404 errors.

A server log file can tell you A LOT about your website because it records the actual requests made by Google’s own crawlers.

Here’s what we found:

As you can see on the bottom right of the image, the log file analysis surfaced a WHOPPING 1,800+ URLs returning 404 errors. This means Google’s crawler had requested all of these pages and received a 404 status code.

Having such a high number of errors erodes your authority and credibility in the eyes of a search engine.

Why would Google rank your content if a significant chunk of your site is not working? From their perspective, you’re an unreliable source to satisfy user intent.

Just fixing these URLs significantly increased the website’s organic traffic.
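For readers who want to try a basic version of this log analysis themselves, here is a minimal sketch. It assumes the server writes the common "combined" access log format (as Apache and Nginx do by default) and identifies Googlebot by its user-agent string alone. Dedicated log analysis tools do far more, and the sample lines are made up.

```python
import re
from collections import Counter

# Matches the request, status, and user-agent parts of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_404s(lines):
    """Count 404 responses served to Googlebot, grouped by requested path."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines in an unexpected format
        # Note: user agents can be spoofed; strict verification needs a reverse DNS check.
        if match["status"] == "404" and "Googlebot" in match["agent"]:
            hits[match["path"]] += 1
    return hits


# Made-up sample lines for illustration.
sample = [
    '66.249.66.1 - - [01/Jan/2023:00:00:01 +0000] "GET /old-product HTTP/1.1" 404 153 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.5 - - [01/Jan/2023:00:00:02 +0000] "GET /old-product HTTP/1.1" 404 153 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]
for path, count in googlebot_404s(sample).most_common():
    print(path, count)
```

In practice you would pass an open log file instead of the sample list, then fix or redirect the most frequently hit paths first.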

Contact us here if you would like to conduct a thorough server log file analysis for your website. You’d be surprised to know the number of errors and issues on your site.

13. Resolved crawling issues

Crawling is how search engines find, understand, and categorize the vast catalog of content on the web.

A web crawler is an automated program that constantly scours the web for both old pages and new content.

A successful crawl translates to the page being indexed, which is essential for ranking. A page that has not been indexed will not show up on SERPs, no matter how great the content is.

However, if the crawler encounters an error, it will skip the page without indexing it.

To maximize its efficiency, each website is allocated a ‘crawl budget’, or the number of pages the crawler will analyze on the site on a given day.

Ideally, websites should be optimized to help crawlers do their job.

The site’s crawl budget will be wasted if the crawler comes across mostly problematic pages. More importantly, fewer indexed pages mean Google will not prioritize the site on SERPs.

But not every page has to be indexed, especially in the case of a large eCommerce site.

There are certain scenarios where it’s better if an eCommerce business de-indexes or no-indexes a page.

For example, a website may have a separate landing page promoting the same products, or there may be two product pages selling the same item, with minor differences in color and size.

In such situations, saving the crawl budget for more important pages you want to rank on SERPs makes sense.

To tell Google to keep a page out of its index, simply add a ‘noindex’ meta tag to the webpage.

Upon a review of the client’s site, we noted many irrelevant pages that should be no-indexed.

In response, the ‘noindex’ directive was added to all these pages so that Google could focus on crawling pages deemed necessary.
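To verify that a page actually carries the directive, its HTML can be checked for a robots meta tag. The sketch below uses only Python's standard library. The tag in `NOINDEX_TAG` is the standard form of the directive, while the checker itself is our own illustration and ignores the equivalent `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser

# Standard form of the directive, placed inside the page's <head>.
NOINDEX_TAG = '<meta name="robots" content="noindex">'


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in robots/googlebot meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """True when the page's HTML contains a noindex robots directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = f"<html><head>{NOINDEX_TAG}</head><body>Duplicate landing page</body></html>"
print(has_noindex(page))
```

Keep in mind that Google has to be able to crawl a page to see this tag, so a no-indexed page should not also be blocked in robots.txt.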

Conclusion

That’s it for this CBD SEO case study! I hope you liked the in-depth guide.

SEO is both an art and a science.

Sometimes, minor fixes go a long way, but a major overhaul is often required to improve performance on SERPs and conversions.

Investing in SEO can be the difference between going bust and succeeding for an eCommerce business.

But SEO can be complicated, with many nuts and bolts to understand.

That’s why it’s recommended to seek the help of experts with technical knowledge and a history of delivering results, such as Retent Marketing.

If you’re an eCommerce business looking for SEO help, don’t hesitate to contact us.